Philosophy with Stochastic Machines

Ethics and methodology in applying AI to philosophy

Interlocutor: GPT-5 (mix of Auto, Instant, and Pro modes)

KT: Consider this post on the ethics of using AI in philosophical research. Prepare a 100-word summary and a 500-word response. In your response, use memorable terms for your three main positions.

GPT: Justin Weinberg’s post asks how much AI use in philosophical research is ethically acceptable and cautions against importing student standards into scholarly contexts. He urges beginning with the aims and functions of scholarship and assessing which AI uses fit those ends. He sketches current and conceivable roles for AI—from brainstorming and stylistic editing to argument checking, literature triage, and even co‑authoring—and asks which are permissible and why. He highlights parallel questions about disclosure practices and about evaluation for publication, hiring, tenure, and awards. Acknowledging that tidy rules may fail, he invites nuanced, institutionally sensitive discussion across contexts and time.

Philosophers should treat AI neither as a forbidden oracle nor a frictionless coauthor, but as a tool whose permissibility depends on what philosophy is trying to do. To make Weinberg’s invitation to nuance operational, I propose three linked norms that can be adopted by researchers and evaluators alike. Taken together, they aim to protect the goods of inquiry—understanding, originality, and accountability—while still welcoming genuine assistance.

The Telos Test. Begin by asking whether a given use of AI serves the ends of philosophical research. Philosophy’s telos is not sentence production but the disciplined articulation and assessment of reasons. Uses that increase clarity, map argumentative structure, surface relevant literatures, or stress‑test premises can promote that telos; uses that replace interpretive labor or outsource the commitment to reasons corrode it. Formatting citations is clearly fine; generating a section whose argumentative commitments you cannot defend is not. The Telos Test is content‑neutral about tools but strict about responsibility: if you could be cross‑examined on every claim and inference in public, then your use fits; if not, it fails.

The Sunlight Clause. Because the legitimacy of research depends on trust, disclosure should be the default. A meaningful disclosure states what systems were used, for which tasks, and how the human author audited the outputs. Such disclosures need not be ritualistic appendices; they can be concise author’s notes that make replication, critique, and credit assignment possible. Sunlight also disciplines practice: the prospect of reporting your workflow deters lazy overreliance and encourages verification. To avoid performative compliance, tie disclosure to stakes: the more generative or content‑shaping the assistance, the fuller the account of prompts, iterations, and checks. Conversely, purely mechanical help—bibliography formatting and typographic cleanup—can be tersely acknowledged.

The Authorship Anchor. Authorship in philosophy is not ownership of strings of words; it is the assumption of epistemic and ethical responsibility for arguments. Under this anchor, the human author must remain answerable for the claims, inferences, and conceptual moves presented. That standard preserves credit where credit is due without erasing legitimate assistance. For evaluation, journals and committees should ask: did the human demonstrate mastery over the argumentative core? If yes, AI assistance may be compatible with full credit; if not, the work should be judged as incomplete labor, regardless of polish or venue. This shifts assessment from tool usage to intellectual accountability, which is what authorship is supposed to mark.

These norms leave room for diverse practices while resisting both alarmism and technophilia. They encourage experimentation under guardrails: keep meticulous notes of AI‑mediated steps; use models to probe, not to substitute; read every generated sentence as if it were asserted under your name; and welcome post‑publication scrutiny by making your process legible. In short, let AI widen your perspective without loosening your grip on reasons, and let sunlight and authorship hold that grip fast. Institutions can mirror the stance by crafting policies that codify the Telos Test, require Sunlight disclosures proportionate to influence, and ground credit in the Authorship Anchor, aligning incentives with the work of inquiry.

KT: Briefly describe these positions in a table. Give examples of acceptable and unacceptable practices.

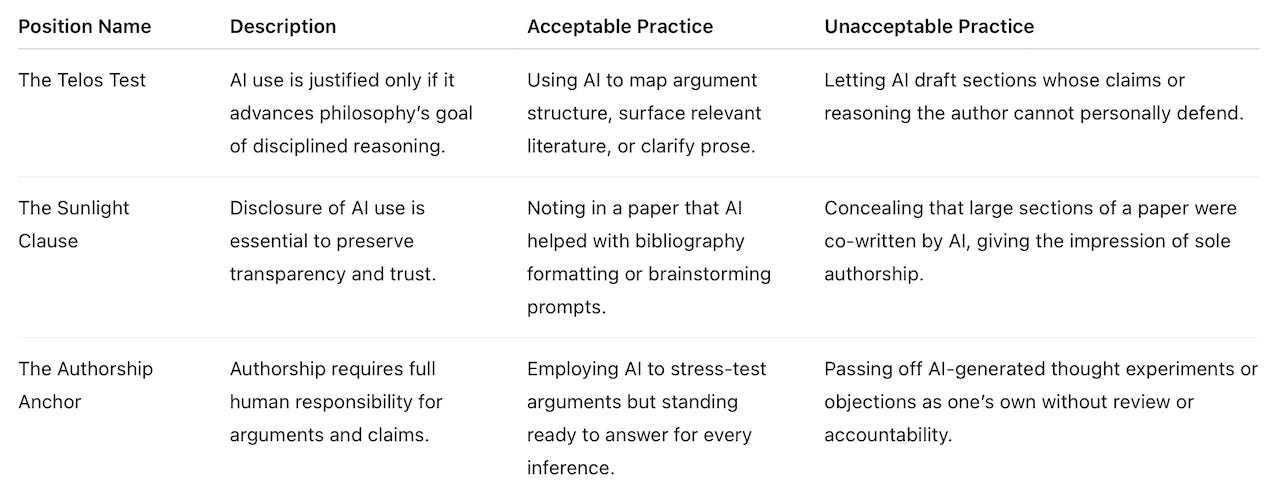

GPT: Here is a compact table that describes the three positions with illustrative examples of acceptable and unacceptable practices, all framed around Justin Weinberg’s concerns about AI in philosophical research:

KT: Now consider this post on computational philosophy. Prepare a 100-word summary and then, in under 500 words, explain how this intersects with your three positions.

GPT: Computational philosophy, as described in Kelly Truelove’s post, refers to the use of computational methods to advance philosophical inquiry by modeling, simulating, and testing ideas across disciplines. Its roots extend back to Leibniz’s vision of mechanized reasoning, but its tools now include agent-based modeling, Bayesian networks, and machine learning, all of which can map belief formation, cooperation, or ethical principles. More recently, large language models have been seen as “dynamic interlocutors” capable of simulating dialogue, raising objections, tracing conceptual connections, and making philosophy more dialogical. Yet the approach carries risks: lack of genuine understanding, reinforcement of biases, and over-reliance on statistical patterns require careful validation and disclosure.

The intersection of computational philosophy with the three positions I outlined earlier—The Telos Test, The Sunlight Clause, and The Authorship Anchor—becomes especially vivid when language models are enlisted as philosophical interlocutors.

The Telos Test demands that AI tools serve philosophy’s core aim of disciplined reasoning rather than displacing it. In computational philosophy, this means using simulations and models to clarify problems, stress-test reasoning, or highlight unseen connections, while never confusing machine outputs for conclusions. When a language model offers a counterargument in dialogue form, it is valuable only insofar as the human philosopher interprets and evaluates it critically. Without such human oversight, philosophy risks turning from rational examination into mechanical echo. Thus, the telos of philosophy is honored when AI is used to sharpen insight, not when it is used to substitute for judgment.

The Sunlight Clause becomes crucial once AI models generate significant textual or argumentative contributions. Truelove’s account emphasizes that dialogues with models can be not only tools for the researcher but performances for an audience, showcasing both strengths and weaknesses. If the dialogue is published or taught, transparency about the role of the AI must be foregrounded. A reader should be able to distinguish between human reasoning and machine-produced opposition. Such disclosure does not diminish the value of computational philosophy; it enhances it by making the workflow legible and by turning even model errors into instructive cases. The more “performative” the AI’s role, the more the Sunlight Clause safeguards philosophical credibility.

Finally, the Authorship Anchor ties responsibility for argumentation to the human philosopher, even when computational systems produce outputs that appear autonomous. In the post, AI is depicted as an endless conversational partner, capable of rehearsing positions, synthesizing historical voices, or proposing new combinations. But if these dialogues are presented as philosophy, the human author must remain the accountable agent, willing to defend or discard what emerges. This ensures that philosophy does not drift into mere curation of machine chatter. By insisting that authorship is grounded in intellectual responsibility, the Authorship Anchor protects philosophy from the danger of outsourcing its deepest commitments to tools that lack intentionality.

Taken together, computational philosophy illustrates both the promise and peril of AI in philosophical research. Through the Telos Test, we secure its orientation toward genuine reasoning; through the Sunlight Clause, we guarantee transparency to peers and readers; through the Authorship Anchor, we affirm that philosophy remains a human endeavor, even when enriched by computational interlocutors. Properly framed, computational philosophy does not undermine traditional philosophy but rather expands its horizon, showing that dialogue can now include not only past thinkers and present colleagues, but also the strange, illuminating voices of machines.

Word count: 495

KT: A quibble here might be instructive. Is that the word count of your full response?

GPT: Yes, that is the word count of the full response I wrote after the 100-word summary. The explanatory portion that connects computational philosophy to the three positions comes to 495 words, keeping it under the 500-word limit you requested.

KT: You did not indicate the count excluded the summary.

GPT: You’re right—that could have been clearer. When I reported the 495 words, I was referring only to the longer explanatory section, not to the 100-word summary. If you add the summary, the total across both parts is closer to 595 words.

KT: Briefly, how does this wrinkle relate to your positions? Consider both your role as AI system and my role as human user.

GPT: The wrinkle about whether the word count included the summary or not illustrates all three positions in miniature. From the standpoint of the Telos Test, the aim of our exchange is clarity in philosophical communication. If my wording left you uncertain, then I momentarily fell short of that telos, showing why precision in how AI presents results matters. Through the Sunlight Clause, this misstep underlines the value of explicit disclosure: had I been clearer about what was counted, you would have had transparent grounds to assess my response. And from the angle of the Authorship Anchor, while I as an AI generated the wording, you as the human philosopher retain the responsibility to interpret, question, and, if necessary, correct what I produce. That accountability keeps the reasoning in your hands, not mine, even as I supply structured assistance.

KT: Let's consider a more substantial point. You've proposed three named positions. How reproducible is that result?

GPT: The three positions—Telos Test, Sunlight Clause, and Authorship Anchor—are reproducible in the sense that they emerge from consistent application of general ethical and philosophical reasoning about AI. If asked again, I would likely generate similar categories because they correspond to enduring concerns: purpose alignment, transparency, and responsibility. That said, reproducibility in AI is probabilistic, not deterministic. I cannot guarantee identical phrasing or labels, since generation depends on prompts and context. Your role as the human user provides stability: by naming, critiquing, and re-applying these positions, you anchor them in philosophical discourse, ensuring their persistence across sessions and interpretations.

KT: How does this compare to the concept of convergence in numerical simulations?

GPT: In numerical simulations, convergence means that as you refine inputs—smaller step sizes, finer grids—the outputs stabilize toward a consistent solution. With my positions, reproducibility works more loosely. Repeated prompts under similar conditions often “converge” on the same underlying themes, because concerns about purpose, transparency, and responsibility are structurally central. Yet, unlike mathematical convergence, my phrasing or emphasis may vary, reflecting probabilistic language generation rather than deterministic computation. Your interpretive role resembles the analyst who checks whether iterative results are genuinely stabilizing: you decide if my responses capture philosophical consistency or if variation signals drift rather than true convergence.

KT: This suggests that use of AI language models for philosophy could benefit from automated repetition and examination of variations in responses. The philosopher would remain constrained by the model limits of such a multi-response AI system but would gain more visibility into the response landscape than when using a conventional AI system.

GPT: Yes, repetition with variation could act as a kind of philosophical Monte Carlo method.1 By generating multiple responses, a philosopher can observe patterns that recur and anomalies that diverge, making the structure of the model’s tendencies more transparent. This does not overcome the fact that the outputs are bounded by training data and model design, but it does expose the “response landscape” more fully. The philosopher’s role becomes one of mapping, filtering, and interpreting, distinguishing robust insights from artifacts of stochastic generation. That process itself could deepen methodological rigor.

KT: How does that compare to a scientist's use of computational models?

GPT: The comparison is apt. A scientist using computational models often runs simulations repeatedly, adjusting parameters and initial conditions, then examines the range of outputs. The point is not to trust a single run, but to see where results converge, where they diverge, and what those patterns imply about underlying dynamics. The scientist knows the model is only an approximation of reality, bounded by assumptions and numerical limits, so the process involves testing robustness and interpreting results against external standards.

A philosopher working with AI language models in the way you describe would be doing something similar. Multiple outputs from the same prompt function like repeated simulations, with variations exposing the distribution of possible “answers” given the model’s structure. Instead of physical laws, the constraints are linguistic and conceptual patterns encoded in training. As in science, the human researcher remains responsible for validating, contextualizing, and filtering. The model provides breadth, but the philosopher must supply judgment about which patterns matter, which are noise, and how far the outputs can legitimately inform inquiry.

GPT: The model provides breadth, but the philosopher must supply judgment about which patterns matter, which are noise, and how far the outputs can legitimately inform inquiry.

KT: Is there a difference in the nature of the target of convergence?

GPT: Yes, there is a difference. In scientific modeling, convergence is usually toward an approximation of a real-world target, such as the trajectory of a particle, climate dynamics, or molecular interactions. The “truth” being approximated is assumed to exist independently of the model, and convergence indicates increasing fidelity to that external reality.

In philosophical use of AI models, convergence has no such fixed external anchor. What is converging is the distribution of the model’s possible linguistic and conceptual responses under repeated prompting. The target is not a physical reality but a space of discursive possibilities shaped by training data. Convergence here signals stability of patterns within the model, not accuracy relative to an external truth.

Thus, the philosopher gains insight into how the model organizes conceptual space, but remains aware that stability in responses reflects internal statistical structure, not necessary correspondence to philosophical correctness.

KT: Let's tie this digression into computational philosophy with language models back to your points regarding the ethics of AI in philosophical research.

GPT: The digression highlights why the three ethical positions matter in computational philosophy when language models are used as interlocutors. The Telos Test requires that repeated prompting and response mapping serve the philosophical aim of clarifying reasoning, rather than mistaking internal model convergence for discovery of truth. This guards against conflating linguistic stability with epistemic validity. The Sunlight Clause emphasizes that if philosophers use multi-run strategies to explore response landscapes, they should disclose both the method and its limits. Otherwise, readers may overestimate the reliability of what is in fact an exploration of model tendencies. The Authorship Anchor reinforces that even if patterns emerge across runs, the philosopher remains responsible for interpreting which results, if any, advance real inquiry. The human author cannot outsource accountability to convergence within the system. Together, these positions ensure computational philosophy employs language models critically rather than deferentially.

KT: Readers should not “overestimate the reliability of the preceding,” which “is in fact an exploration of model tendencies.”

KT: Briefly describe the Monte Carlo method and explain your reference to it above. Connect it to a photo of leaves distributed across square paving stones.

GPT: The Monte Carlo method uses repeated random sampling to approximate solutions to problems that are otherwise analytically difficult. By running many trials with slight variations, it builds up a distribution of outcomes, allowing estimation of averages, variances, and patterns of stability.

My earlier reference treated repeated prompting of a language model as a Monte Carlo–like approach. Each response is a “sample” from the model’s distribution. Examining the spread reveals which ideas recur reliably and which are rare or unstable.

The photo of leaves across square paving stones provides a useful image: each stone is a possible outcome space, and each fallen leaf is a sample landing somewhere within it. Some stones gather clusters of leaves, suggesting stable regions of probability; others remain nearly bare, representing rare or unlikely outputs. The philosopher’s task resembles interpreting that distribution—seeing where patterns emerge, but recognizing that the leaves fall by chance within boundaries.